Heubach was already collecting data from its PID controllers in a central PIMS (production information management system) database. According to industry insiders, many chemical and pharmaceutical manufacturers accumulate such data, but it then usually sits unused on servers. Production data is often stored in condensed form – in the case of PID controllers, at sampling intervals of two seconds.

Optimising locations worldwide

Full-fledged controller optimisation is probably not possible with such data. Nevertheless, experts from Heubach and Festo wondered whether the centrally accessible data could be put to good use. After all, PIMS databases have an invaluable advantage: they contain the data of all controllers from different systems, different manufacturers and all connected production sites. Michael Pelz, Automation & Digitisation Manager at Heubach, says: "Our goal would be a central controller monitoring system. In order to achieve this, we would have to succeed in meaningfully evaluating the data in context and making it usable, thus creating a uniform instrument with which we can analyse the controllers of all connected locations worldwide." Based on the results obtained, individual controllers could then be analysed and optimised in a targeted way, for example with optimisation software in the SCADA system in use and with experts on site.

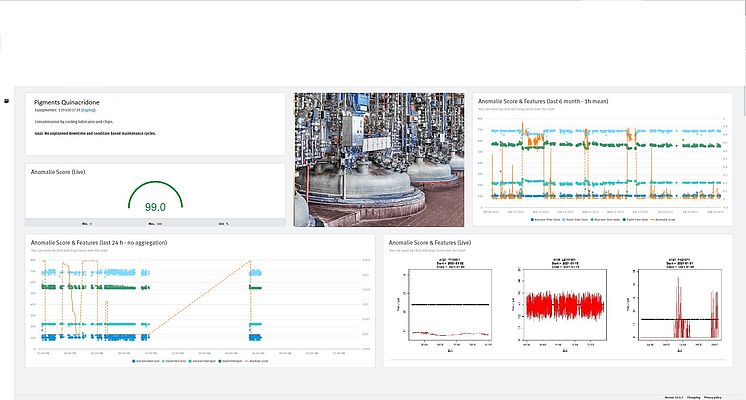

For the solution, the Festo Automation Experience (Festo AX) software was the obvious choice for Heubach. "It helps our customers to make decisions based on facts," emphasises digitalisation expert Eberhard Klotz from Festo. Festo AX is a flexible and easy-to-use solution that enables users to extract maximum value from machine and plant data through artificial intelligence (AI) and machine learning.

AI evaluates source data

In the first step, Festo AX's AI – as a pragmatic approach – evaluated several months of baseline data and cleaned it if necessary. Experts from Heubach's Maintenance, Process Engineering (Quality) and Operations Improvement Management departments accompanied this step and evaluated the initial results from the historical offline data.

In the second step, the specialist departments reported back, with the variable batch processes in mind, how sensitive the evaluation should be for each controller depending on its physical function. For example, it was assessed whether the temperature factor was less critical than the flow factor or the pressure factor. Subsequently, it was determined how the different deviations should be prioritised. An additional trend line of the anomaly scores should also help the departments to identify the deviations per batch accurately, early and, above all, easily.

This should make algorithms and error scores adaptable within the AI evaluation, taking into account the experience of experts and conditions on site. It was also important to implement a simple visualisation of the results, with the aim of being able to use aggregated dashboards in the future. In the third step, the project should become portable (on premise) and lead to an online query, including visualisation, within existing systems, which greatly simplifies implementation from a data security point of view.
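
The idea behind the second step, weighting deviations by physical function and following a trend line of anomaly scores per batch, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the weights, the function names and the controller tags are hypothetical, and the scoring Festo AX actually applies is not described in this article.

```python
from statistics import mean

# Hypothetical sensitivity weights per physical function (illustrative values,
# not taken from the Heubach project): a higher weight makes deviations count more.
SENSITIVITY = {"temperature": 0.5, "flow": 1.0, "pressure": 1.0}


def batch_score(deviations, kind):
    """Mean absolute deviation of one batch, scaled by the sensitivity weight."""
    return SENSITIVITY[kind] * mean(abs(d) for d in deviations)


def trend(scores, window=3):
    """Trailing moving average over per-batch scores (a simple trend line)."""
    out = []
    for i in range(len(scores)):
        chunk = scores[max(0, i - window + 1): i + 1]
        out.append(mean(chunk))
    return out


def prioritise(trends):
    """Sort controller names by their latest trend value, worst first."""
    return sorted(trends, key=lambda name: trends[name][-1], reverse=True)


# Usage with made-up deviations (setpoint minus measured value) for three batches.
temperature_batches = [[0.2, -0.1, 0.3], [0.4, 0.5, -0.3], [1.0, 0.8, -1.2]]
flow_batches = [[0.1, 0.1, -0.1], [0.1, -0.2, 0.1], [0.2, 0.1, -0.1]]

trends = {
    "TIC-101": trend([batch_score(b, "temperature") for b in temperature_batches]),
    "FIC-202": trend([batch_score(b, "flow") for b in flow_batches]),
}
ranking = prioritise(trends)
```

Keeping the weights in a plain dictionary reflects the stated goal that scores remain adaptable by the specialist departments without touching the evaluation logic.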

Optimal adaptation of the algorithm

"The results were already amazingly good after the first step of the AI analysis: it was very easy to see which controllers, for example, exhibited a strong fluctuation in the manipulated variable and thus caused the actuators to wear out more quickly," explains digitisation expert Pelz. In addition to many well-adjusted and inconspicuous controllers, the AI also found controllers that constantly failed to reach the setpoints, overshot strongly, oscillated or were subject to manual intervention. With some controllers, however, the results were not always immediately comprehensible due to the different areas of application. "In a close exchange between controller and data science experts, the AI algorithm could be adapted to other special features in (batch) production. For the pragmatic and quick exchange of results, only classic office tools were actually sufficient in this phase," adds Pelz.

"Since we have different process control systems in use, a controller analysis was previously only ever possible at plant level and with different tools. But with this solution we have two outstanding advantages: firstly, it is cross-manufacturer and can be used globally on a central system, so that we can use the algorithm for all systems and controllers. Secondly, the Festo solution is so flexible on the IT side that it can be taken from a pragmatic offline pilot project, to an on-premise solution within our own IT systems, through to a cloud-based implementation," says the Automation & Digitisation Manager from Heubach.
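
The controller symptoms mentioned above (strong fluctuation of the manipulated variable, setpoints that are never reached, overshoot, oscillation and manual intervention) can each be approximated with a simple indicator computed from logged values. The following is a minimal sketch, assuming equal-length lists of samples at a fixed interval; the function name and the indicator definitions are assumptions for illustration, not the method used in Festo AX.

```python
from statistics import mean


def controller_indicators(setpoint, process_value, output, manual):
    """Return simple health indicators for one PID loop.

    All arguments are equal-length lists with at least two samples taken at a
    fixed interval; `manual` holds booleans (True = manual mode).
    """
    errors = [sp - pv for sp, pv in zip(setpoint, process_value)]
    return {
        # Average step-to-step movement of the manipulated variable:
        # large values suggest faster actuator wear.
        "output_travel": mean(abs(b - a) for a, b in zip(output, output[1:])),
        # Mean control error: a persistent offset means the setpoint is missed.
        "mean_error": mean(errors),
        # Largest excursion of the process value above the setpoint
        # (a simplification: true overshoot depends on the step direction).
        "max_above_setpoint": max(0.0, max(-e for e in errors)),
        # Sign changes of the error: many crossings hint at oscillation.
        "error_crossings": sum(1 for a, b in zip(errors, errors[1:]) if a * b < 0),
        # Share of samples spent in manual mode.
        "manual_share": sum(manual) / len(manual),
    }


# Usage with a made-up oscillating loop around a setpoint of 50.
indicators = controller_indicators(
    setpoint=[50, 50, 50, 50, 50, 50],
    process_value=[48, 52, 48, 52, 48, 52],
    output=[10, 30, 10, 30, 10, 30],
    manual=[False, False, False, False, False, True],
)
```

Because these indicators only need setpoint, process value, output and mode, they do not depend on which process control system produced the data, which matches the cross-manufacturer aim described in the article.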

Mastering current and future challenges more effectively thanks to AI

"Especially in the current times, it is important to be able to optimise production processes as effectively as possible in terms of energy consumption, quantity and quality and to monitor them in the long term. In the future, the central controller monitoring system can be an important building block here, especially in the context of the advancing optimisation of the CO2 footprint in production," says digitalisation expert Pelz.